Structure from Motion Knowledge Tree

in 3D Vision

Knowlegde Tree for SfM

This post is about the fundamental knowledge and concepts in the 3D Computer Vision field. The knowledge points of this post is mainly base on the book An Invitation to 3-D Vision SpringerLink. I will try to rephrase the knowledge using my words and combine with some other knowledges.

- Linear Space

- Lie Algebra

- Image Formation

- Image Correspondence

- Epipolar Geometry For Calibrated Views

- Epipolar Geometry for uncalibrated views

- Calibration

- Epipolar Summary

- Step-by-step build of a 3D model from Image using Uncalibrated camera (P375)

- Appendix

Linear Space

- Linear space / Vector space: Given any vectors within the space, they are always closed within the space under any linear combinations.

- Subspace: A subset of the Linear space. Also it is linear closed and contain the zero vector

- Spanned subspace: Expand a subspace to a bigger vector space which contains all possible linear combinations of the original subspace.

- Linear independence: A set of vector is linear independence when the linear combination of the vectors only equal 0 when scalar parameters are 0s. E.g. One-hot encoding.

- Basis: A set of linear independent vector and they can span to a linear space.

- The number of the basis is always equal the number of the dimension of the spanned linear space.

- The change-of-basis matrix: There are 2 scenarios:

- the new basis is wrote in the linear combination of the original basis.

- In this situation, the original basis is consider as the standard basis. So the change-of-basis matrix can be just expressed as: each new basis vector as each row. So the using this change-of-basis multiply the original coordinates means projecting the old coordinate to the new basis. A example usage is the TBN matrix in the Graphics to convert the Tangent space normal to world space.

- In comparison with the Rotation matrix, which is put the new rotated axis in the old coordinate basis as the column of the rotation matrix. The result of the rotation is still under the original basis. This can be understood as re-calc the contribution of each scalar along the original basis under the new rotated axises. The change-of-basis and rotation matrix are both SO(3) and mutual inverse.

- Another case is when the new basis and the original basis are expressed of another fundamental basis. Then the change-of-basis is (B_new.inv() * B_ori). And for each B, put the basis on the column.

- the new basis is wrote in the linear combination of the original basis.

- Inner Product:

- Orthogonal vector inner product is 0.

- Denote the angle difference of 2 normalized vector. The bigger difference, the smaller value

- Use for project 1 vector to another

- Determine if the direction is shooting forward the plane normal or into the plane normal (if value > 0)

- Cross Product (assume right-hand system):

- Self cross product is 0

- Determine a point is on the left or right of a vector. Moreover, we can determine if a point is within the triangle. (Rasterization)

- Determine the triangle is CCW/CW wind

- Use skew-symmetric matrix to transform the cross product calculation to a matrix multiplication.

- Any 2 non-parallel vectors’ cross product can describe a plane’s direction.

- Group: A closed set of transfer functions. Notice that there is no Commutative Property for the Group.

- General Linear Group GL(n): det(A) != 0

- Orthogonal Group O(n): A.inv() = A.transpose() and det(A) = +1/-1. Rank(A) = n.

- Special Orthogonal Group SO(n): A is O(n) and det(A) = +1. This is because of right hand system.

- Affine Group A(n): [A, b; 0, 1]

- Euclidean Group E(n): T is Affine group also A is orthogonal group.

- Special Euclidean Group SE(n): If A is further a SO(n). Then it is a SE(n)

- Euclidean Group E(n): T is Affine group also A is orthogonal group.

- Orthogonal Group O(n): A.inv() = A.transpose() and det(A) = +1/-1. Rank(A) = n.

- SO(n) is O(n) is GL(n)

- SE(n) is E(n) is A(n) is GL(n+1) (Homogeneous coordinate)

- General Linear Group GL(n): det(A) != 0

- Gram-Schmidt Process: Given an arbitrary set of basis of a GL(n), we can always transfer them to a set of mutual orthogonal basis. This is done in interation by always deduct the component of projection to the existing orthogonal basis. Gram–Schmidt process - Wikipedia

- QR Decomposition: Any matrix can be decomposed to A = QR. Gram-Schmidt Process is a kind of QR Decomposition. Q is an orthogonal matrix and R is an upper triangular matrix. QR decomposition can be used for solving least square problem, no matter the A is under-determined or over-determined.

- Eigen Decomposition: Eigen vectors are the direction of transform. Eigen vector and Eigen value can be used to decompose a matrix. And also can use for solving inverse, however not recommend to use Eigen Decomposition to solve linear equation.

- SVD

- The singular values of a M x N matrix A are the square roots of the eigenvalues of the square matrix A.t() * A.

- Also det(A) *= singular_value[i++].

- Solve a Linear equation Ax = b:

- A is non-Singular det(A)!=0:

- Calculate A.inv()

- Cramer’s rule

- A is Singular:

- Pseudo-inverse using SVD

- LU decomposition

- QR decomposition

- For Mx = 0, calculate the eigen vector of the (A.t() * A). The solution is the eigen vector corresponding to the smallest eigen value.

- For Mx = 0, SVD, the solution is the singular vector corresponding to the smallest singular value.

- For Mx = 0, force the first order partial derivative to be zero. Jacobian matrix to be zero. Might get local minimum.

- Non-linear gradient descent. Might get local minimum.

- Eigen: Linear algebra and decompositions

- Eigen: Solving linear least squares systems

- A is non-Singular det(A)!=0:

- Jacobian matrix: first order partial derivative matrix, used as the gradient direction in the non linear optimization. Hessian matrix is the second order partial derivative matrix.

Lie Algebra

- Lie group is the SE(3), Lie Algebra map the Lie Group to the exponential coordinate space, which is more easy to calculate derivative and has meaningful add operation. Just like the Cartesian Coordinate System and the Polar Coordinate System. They both represent the same point but in different format.

- Rodrigues formula: Map AngleAxis to Lie Group SO(3). There is another formula using the Trace of matrix to map the SO(3) to the AngleAxis. Moreover we can use AngleAxis to calculate the corresponding mapped value in Lie Algebra: phi=skewsymmetric(w=theta*axis). The direction of the w is the axis and magnitude is the angle. So we now know how to convert between Lie Group and Lie Algebra. (Detail check Slambook chapter 4)

- Homogeneous Coordinate: Can covert the linear combination to a simple matrix multiplication.

- Lie Algebra for SE(3)

- Later when we talking about the Gradient to the Camera pose [R,t], we are talking about the Jacobian of the camera pose on the Lie Algebra.

- P37: Summary

Image Formation

- Image through lens. Fundamental equation: P48

- Image through pinholes.

- Intrinsic, The difference between the Projection matrix in Graphics is: Intrinsic K is modeling base on the pinhole camera. K mapped the normalized Camera space position (divided by Z) to pixel coordinate. Projection in graphics map the camera space position to a 3 dimension clipped frustum. Then rescale the frustum to NDC space.

- Extrinsic, is the camera pose relative to the rig pose.

- Radial Distortion coefficient

- Rendering Function from the Image Sensor perspective: P69

- Coordinate transfer flow: P_world -> (mul pose) -> P_rig(If is rig) -> (mul extrinsic) -> P_camera -> P_camera_normalized_depth -> (mul intrinsic) -> P_uv

Image Correspondence

- Optical Flow: Brightness Constancy Constraint (4.14). A strong assumption that assume the camera or the pixel on the image is moving very slow. So the delta of a pixel’s greyscale is equal to the image gradient at last frame along X and Y axis, times the delta t. Moreover, we can select a m*m window in the image and apply this constraint to each pixel. Then we can form an over-determined linear equation. Solve that can yield the speed of the pixel change. Formula (4.20) is the linear equation for the pixel speed.

- Algorithm (4.1): denote an algorithm for compute the pixel displacement with either feature tracking or Optical Flow. The main difference is that

- for feature tracking, the target points are selected corner points (This method also known as the 8-Point algorithm for solving the Epipolar constraint).

- For the Optical Flow, we just need to detect the feature point at the first frame, then use the optical flow to tracking them and solve for the camera movement. This cam save us a lot of computation on Feature detection and matching. (This is also known as LK Optical Flow)

- Feature Point Detection: Here the book mainly talking about some traditional feature point technique. Morden feature detection include: HOG, SIFT, SURF, ORB and even Deep Learning.

Corner Detection: Algorithm (4.2). Harris Corner Detection is using the EigenValue to characterize the quality of the image gradient matrix. Shi-Tomasi detection: idea is similar to Harris but in different form.

- Line detector: Canny edge detection (Algorithm 4.3). More over, we can use the line segments (B-splines) to fitting the line base on the image gradient.

- Sobel Operator: Gradient kernel to convolution with the whole image to determine the image gradient along both X and Y axis for each pixel.

- SIFT + FLANN (TODO)

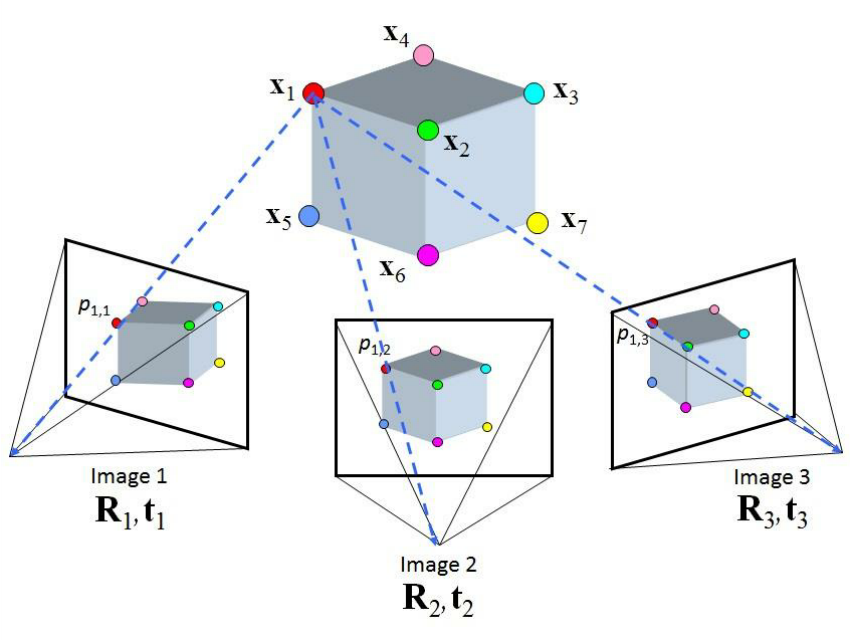

Epipolar Geometry For Calibrated Views

- Epipolar Constraint: Equation (5.2). Notice that for essential matrix, the x is the normalized Camera space coordinate [X_Z; Y_Z; 1]. For Fundamental Matrix, the p is the homogeneous pixel coordinate [u; v; 1].

- Other concepts:

- Epipolar plane

- Baseline

- Epipoles

- Epipolar lines

- Essential Matrix is E = skew(t) * R.

- Fundamental Matrix is F = K.inv().t() * E * K.inv().

- The eight-point Algorithm:

- Why 8 points? Because of Scale Ambiguity of Monocular SLAM:

- Because the equation to be solved is Mx = 0, which means x times any scalar factor, this equation still stands. So we can always normalize the x to set the last element to be 1. So that we have (9-1) degree of freedom. So only 8 points

- Another perspective of this problem is that due to Scale Ambiguity, we can always normalize the norm(x) to be 1. So that we have our 9th equation. We just need 8 more.

- Solutions, usually we will have more than 8 pair of matching points:

- Linear solution: Since this is an over-determined linear equation, we can use SVD then get smallest singular value or (M.t()*M)’s smallest eigen value to solve it. Smallest because ideally the corresponding Singular value should be 0.

- Non-linear Solution: reform the 8-points equation to become a least-square problem: argmin(x) such as (x.inv() * M.t() * M * x). Then we can use non-linear gradient descent.

- Or we can use the RANSAC, which is a cross-validation mechanism. Basically, we random select 8-point pairs and solve for a pose. And for all the possible pose, we calculate which one has least overall reproduction error. Then select that.

- Why 8 points? Because of Scale Ambiguity of Monocular SLAM:

- To recover R and t from E from 2 calibrated views. Assume already obtain the rough estimated E through the 8-points algorithm:

- However the E might not on the essential space (manifold). So we need to normalize (retroject) it on the essential space. This is done via SVD and set the diagonal singular matrix to [1, 1, 0] or [(d1+d2)/2, (d1+d2)/2, 0] (d1 and d2 are the two largest singular values). Since this is monocular reconstruction, the world space scale cannot be determine. So it is OK to assign 1 as the normalized singular value. Also notice that for valid essential matrix, the singular value format must be [t, t, 0] (Theorem 5.5).

- To obtain the R and t, we need to re-compose the SVD result of the normalized. We will obtain 4 results and test the triangulated point’s depth must be positive to select the final result.

- A complete Epipolar Triangulation (Algorithm 5.1)

- Related OpenCV APIs: cv::findEssentialMat, cv::findFundamentalMat, cv::findHomography, cv::recoverPose, cv::triangulatePoints, cv::projectPoints

- Using this Monocular SfM we can only can only obtain the normalized camera space coordinate. And will use the first keyframe’s camera space coordinate as the world coordinate. For initialize the Monocular SLAM, we need to have translation between the 2 frame. Then to build a map, ORB-SLAM normalized the median depth of the first keyframe’s feature point as 1 unit. Then we can obtained a normalized translation and other feature points’ depth, then we can starting building the map.

- Planar Scene and Homography:

- 4-points Algorithm and [R,t] decomposing: (Algorithm 5.2)

- In practice, when most feature points are on the same plane or the camera only have rotation, the Homography matrix works better than fundamental matrix. So usually compute both and select the one that has the lower reprojection error.

- Relationship between Essential Matrix and Homography Matrix: E = skew(t) * H. So when the t is small, the E degenerate to H.

- Continuous Epipolar Geometry for both Essential and Homography:

- Essential Algorithm 5.3

- Homography Algorithm 5.4

- Summary: P160

Epipolar Geometry for uncalibrated views

- Fundamental Matrix: Pixel coordinate constraint.

- Here the camera intrinsic K is unknown. When using Bundle Adjustment, we can add the intrinsic vertex to estimate through graph optimization.

- Stratified Reconstruction: with uncalibrated camera, we can only reconstruct up to Projective reconstruction: X_p = H_p * H_a * g_e * X. X is the true structure and g_e is the rigid body motion [R,t]. So the distortion comes from H_p and H_a.

- Projective Reconstruction P188:

- After obtain the Fundamental matrix using 8-point algorithm (P212), we can decompose it and use it to solve projective triangulation.

- Affine reconstruction: To obtain a Euclidean Reconstruction, we need to first upgrade the projective reconstruction to Affine Reconstruction

- Euclidean Reconstruction

- Projective Reconstruction P188:

Calibration

- Offline Calibration:

- With a checkerboard calibration rig/planar

- Calibration with Checkerboard P203:

- Detect the checkerboard corner points pixel coordinate on image

- Define a World coordinate system on the Checkerboard itself. Like the lower left corner is the origin and each square is a unit 1.

- The we have the linear equation:

- H = K * [R,t]

- cross(x_pixel * H * X_world) = 0

- Solve above we get H. Then denote S = K.inv().t() * K.inv(). {h1, h2} as the first and second column of H. Then we have constraint that h1.t() * S * h2 = 0.

- Solve for S then we can decompose K

- K has 5 DoF (with the skew factor) so we need at least 3 image to get enough linear equation to solve them.

- Calibration with Checkerboard P203:

- With a checkerboard calibration rig/planar

- Online Calibration:

- With partial scene information

- Calibrated with vanishing 3 points P199

- With partial scene information

Epipolar Summary

- P206-207

Step-by-step build of a 3D model from Image using Uncalibrated camera (P375)

- Feature Selection

- Feature Matching

- Feature tracking

- Optical Flow

- Brute Force

- RANSAC feature matching (P389)

- FLANN

- Feature buckets

- Feature tracking

- Projective reconstruction

- Two-view initialization

- Obtain Fundamental Matrix with Sampson distance refinement (P393)

- Projective Reconstruction (P395)

- Decompose F to [R,t] and get 3D structure

- Two-view initialization

- Euclidean reconstruction with partial camera knowledge (P401)

- Camera Calibration

- Reconstruction with partial object knowledge

- Use prior or semantic information to help reconstruct the 3D model

- Visualization

- Rectify the Epipolar constraint (minimize Sampson distance)

- Sparse Feature points to dense mesh triangles

- Texture mapping

Appendix

- Kalman Filter and EKF (P468)

- Non-linear optimization (P480)