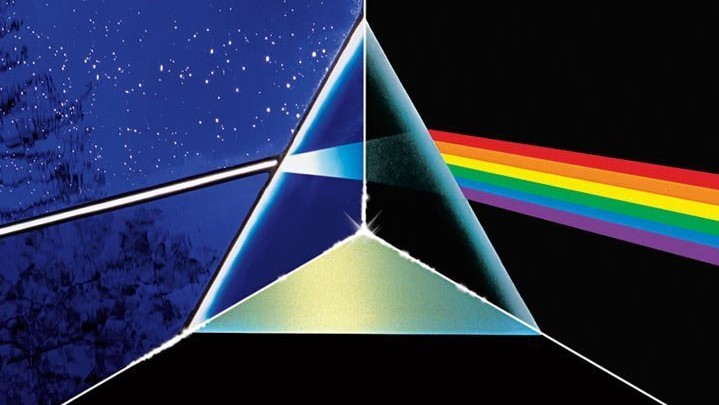

The Dark Side of Deep Learning

Is Deep Learning the right path to ultimate AI?

Changing from 0.1f to 1.f is always easier than changing from 0.f to 0.1f. So for my first blog post, I decided to translate a short article I wrote earlier into English and add some new thoughts. It is about my personal understanding of Deep Learning and its ethical influence on human civilization. I hope it gives you some ideas to explore.

- Deep Learning is not the true AI

- How Deep Learning really works

- Accommodation to machine

- Reveal the brutal truth of dark AI

- What should Deep Learning do and what should not

Deep Learning is not the true AI

A heads-up on the tone of this article: I am pessimistic about Deep Learning, and I think it is diverting humanity from the path to inventing true Artificial Intelligence. I am not totally opposed to DL; I just think it is a superficial solution to the true AI problem. I was once passionate about this new ML technology and tried to apply it to every medical image processing task I was working on in the research lab. Needless to say, the results were promising and amazing. It can automatically capture the hidden patterns in a dataset without understanding what the data really is. If we want to improve the result, we just add more layers and more neurons, and performance tends to improve along with them. However, when I stepped out of the lab and started looking at real intelligence, like kids learning a language or playing games, I began to wonder: is this technology giving machines the ability to perceive the real world, or is it just a brute-force method that tortures the computer into iterating through a huge amount of information, like the infamous “Ludovico Technique” in A Clockwork Orange? Knowledge is thinking inside the box. Intelligence is thinking outside the box.

I am going with the latter. The argument is simple: if you show a kid fewer than 10 images of a cat, they can identify a cat on the street the next day. They can even sketch a cat-like figure on paper. So truly intelligent beings don’t learn from a huge amount of data; a small chunk of information is enough to stimulate the brain to memorize the pattern. They may still make mistakes. A kid might say a tiger is a big cat. But this level of mistake is tolerable, and we can very easily train the kid into a robust cat recognition system. With Deep Learning, by comparison, we have to throw in tons of images, 20% positive and 80% negative, and we might still get a model that thinks a cookie is a chihuahua. This process is unnatural, and the true intelligence we are trying to simulate does not arise in this brute-force way. Also, as long as we train on a dataset, the model is always overfitting, just to different extents. I think even the world’s most robust car recognition model could still fail on an image of the alien-looking truck from Tesla. The real AI that we are all pursuing shouldn’t work this way. AI should also be extensible, like the human brain, which can keep learning continuously.

How Deep Learning really works

My understanding is that the traditional, structured Deep Learning method is a brute-force way to approximate the real model behind a given task. The training process is a non-linear cost function optimization solved with gradient descent, an iterative optimization approach of the kind also used for other modern computer vision problems such as SLAM. DL uses a massive number of parameters and complex data transforms to fit the curve. It is cheap in the sense that we don’t even need to analyze what kind of model lies underneath. Many of the techniques that have been introduced, such as pooling, ReLU, or Dropout, are there just to break the linearity of the mathematical model or to add randomness to it, so that it has a better chance of reaching a close result. This heavily parameterized method is just a cheap way to simulate the real underlying model.
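To make the curve-fitting picture concrete, here is a minimal sketch in plain Python, not taken from any real framework. It assumes a toy task (fitting y = x² on a handful of points), a single hidden layer of ReLU units, and hand-written gradients. The names `init_params`, `predict`, `gradient_step`, and `train`, as well as the layer size, learning rate, and step count, are illustrative choices of mine and not part of any library.

```python
import random


def relu(z):
    # The non-linearity: without it, the whole model collapses to a straight line.
    return z if z > 0.0 else 0.0


def init_params(hidden, seed=0):
    # Random starting point; the sizes and ranges are arbitrary assumptions.
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "b1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b2": 0.0,
    }


def predict(p, x):
    # One hidden layer: out = sum_j w2[j] * relu(w1[j] * x + b1[j]) + b2
    hidden_sum = sum(
        w2 * relu(w1 * x + b1) for w1, b1, w2 in zip(p["w1"], p["b1"], p["w2"])
    )
    return hidden_sum + p["b2"]


def mse(p, xs, ys):
    # The cost function: mean squared error over the dataset.
    return sum((predict(p, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(p, xs, ys, lr):
    # One full-batch gradient descent update, with gradients derived by hand.
    n, h = len(xs), len(p["w1"])
    g_w1, g_b1, g_w2, g_b2 = [0.0] * h, [0.0] * h, [0.0] * h, 0.0
    for x, y in zip(xs, ys):
        pre = [p["w1"][j] * x + p["b1"][j] for j in range(h)]
        act = [relu(z) for z in pre]
        out = sum(p["w2"][j] * act[j] for j in range(h)) + p["b2"]
        d_out = 2.0 * (out - y) / n  # d(cost)/d(out) for this sample
        g_b2 += d_out
        for j in range(h):
            g_w2[j] += d_out * act[j]
            if pre[j] > 0.0:  # ReLU passes gradient only where it is active
                g_w1[j] += d_out * p["w2"][j] * x
                g_b1[j] += d_out * p["w2"][j]
    for j in range(h):
        p["w1"][j] -= lr * g_w1[j]
        p["b1"][j] -= lr * g_b1[j]
        p["w2"][j] -= lr * g_w2[j]
    p["b2"] -= lr * g_b2


def train(hidden=16, steps=2000, lr=0.02, seed=0):
    # Toy dataset (an assumption for illustration): 21 points of y = x^2 on [-1, 1].
    xs = [i / 10.0 - 1.0 for i in range(21)]
    ys = [x * x for x in xs]
    params = init_params(hidden, seed)
    loss_before = mse(params, xs, ys)
    for _ in range(steps):
        gradient_step(params, xs, ys, lr)
    return params, loss_before, mse(params, xs, ys)


if __name__ == "__main__":
    _, before, after = train()
    print("cost before training:", before)
    print("cost after training: ", after)
```

Nothing in this code knows that the target is a parabola. The parameters are simply nudged downhill until the curve fits, which is the sense in which the method is called cheap above.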

Accommodation to machine

Let’s step back and think about a more practical problem: how long will it be before we have a general, reliable AI in our daily life? AI has been portrayed as the next technological revolution. Look at the innovations that really changed human civilization. Inventors started designing cars around the 17th century, and it took almost 300 years for cars to become an affordable, common item in society. AI is much more complex than cars, and we are still at an early stage and haven’t even defined what AI is. So we can make a bold assumption here: maybe AI doesn’t exist, or some smart scientist will prove that we would need to go faster than light to create it. I think we really should consider the possibility that true AI is just a fantasy and that humans can never create it.

After years of skepticism toward DL, I have started to form some ideas about a Left-wing AI Accelerationism. By Accelerationism I mean that, in order to achieve the goal, we can do whatever we want, even if the consequences are negative for other areas. This is a dangerous and selfish thought, but it is really productive and can improve human life. The idea is: if it is hard to make machines understand humans, why not make humans accommodate machines? If error-free NLP is hard to achieve, we can invent a very logical, formatted language with a well-defined grammar that machines can easily understand. Then we make every kid learn this language, and eventually humans can talk freely with machines. Similar ideas apply to other areas, like microchipping every human and product so that machines can recognize objects directly. This sounds crazy and impractical, but think about the history of the car again. Before cars were introduced to society, people could walk anywhere they wanted. There were no traffic lights, or even the concept of a pedestrian. However, once cars started to appear on the streets, people had to learn the rules. People had to accommodate the machine. Governments created laws to redefine human behavior on the streets, and this expedited the revolution of the automotive industry. So why don’t we also create new rules to accommodate AI?

Think about it from the other side: will you ever allow AI to make a decision for you? I don’t think people will ever fully trust AI. The pride and prejudice baked into humanity always remind us that a machine is a tool; it does not tell us what to do. If AI is always more foolish than humans, cannot solve problems that humans cannot solve, and nobody relies on it to make decisions, then it is not all that meaningful. If so, why don’t we accelerate its creation? Accommodating the machine is the fastest and simplest way to create a workable AI for daily life. Then, with this powerful tool, human beings can focus more on the problems that are meaningful, like the ultimate understanding of the universe or how to live forever. AI cannot answer those questions at all. Only human beings are the key to all the locks in the world.

Reveal the brutal truth of dark AI

This Accelerationism will definitely have a downside for our lives. The creation of this fake AI will make human beings lose their humanity and culture. We will speak the same language, share the same chip, make music that both humans and AI can enjoy, decorate our rooms with QR codes, update the chips inside our bodies over the air or be fined by a robot, and so on. The fascinating part of the world is that we are all different. Our cultures are diverse. This is the best part of us, but the worst for the machine. High uncertainty and information entropy make it impossible for the machine to estimate a reliable prior probability. So the AI problem is not really a technology problem. AI will become a controversial paradox between the new order and the old tradition: a Pandora’s box that can open a door to a new cyberpunk world and sacrifice our beautiful humanity and variety of cultures.

Once you start viewing AI like this, you will understand Elon Musk’s warning that AI will be the biggest threat to human beings. It probably sounded funny before you read this article, right? The threat will not come from AI, but from our eagerness to evolve human civilization and expand our territory. Only we can destroy ourselves.

What should Deep Learning do and what should not

Finally, I want to say how we should deal with current Deep Learning technology. I have to admit that it is a great data processor. It works very well on some practical problems. We can definitely use it for non-critical entertainment tasks like super-resolution, photo synthesis, language translation, etc. DLSS from Nvidia is a good example. I imagine it is not trained on too much data, in order to avoid overfitting. DL is very efficient at this type of task, and it is OK for it to make errors. Other generative approaches like GANs and autoencoders also cannot really hurt us, and they add more fun to the entertainment side of our lives. On the other hand, AI shouldn’t play a major role in areas like classification, medicine, the stock market, or self-driving. These areas require a strong decision-maker, and AI just doesn’t have that ability.

If you find yourself reading this article in the 25th century and human beings still speak English, not some funky machine language, please leave a comment below to tell me whether I was right or wrong.